[My Journey to CCIE Automation #5] Building Network Pipelines for Reliable Changes with pyATS & GitLab CI

My journey continues

👋 Hi, I’m Bjørnar Lintvedt

I’m a Senior Network Consultant at Bluetree, working at the intersection of networking and software development.

As mentioned in my first blog post, I’m preparing for the CCIE Automation lab exam — Cisco’s most advanced certification for network automation and programmability. I’m documenting the journey here with weekly, hands-on blog posts tied to the blueprint.

Blog post #5

After playing with Ansible in blog post #4, I wanted to move into something closer to network verification and CI/CD integration.

That’s where pyATS and GitLab CI come in.

Why pyATS?

pyATS is Cisco’s Python framework for testing and validating network devices.

It allows you to:

- Parse command outputs into structured data
- Learn entire device features (like routing, interfaces, logging)
- Write automated prechecks/postchecks
- Integrate with CI pipelines

In other words: instead of reading CLI output, your code can understand the network state.

Why GitLab CI?

GitLab CI lets us run pipelines whenever we push code or trigger jobs.

Instead of manually running prechecks on a lab or production network, we let GitLab:

- Spin up a container with pyATS
- Connect to our devices (from inventory/testbed files)
- Run verification jobs
- Collect results and make them available as artifacts

This brings DevOps-style repeatability to network engineering.

This Week’s Project

I built a GitLab CI pipeline with four stages:

- Setup
  - Gets all hosts from the Inventory API (the service created in a previous week, see the GitLab repository) and generates a pyATS testbed file used by the following stages
- Prechecks
  - Verifies whether the syslog servers are already configured
- Deploy
  - Deploys the syslog config
- Postchecks
  - Verifies that all the syslog servers are configured
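To make the Setup stage more concrete, here is a minimal sketch of how a list of inventory hosts could be turned into a pyATS-style testbed structure. The `build_testbed` helper and the host fields (`name`, `ip`, `os`) are assumptions for illustration; the real Inventory API response and the script in the repo may look different.

```python
import json


def build_testbed(hosts, username, password):
    """Return a pyATS-style testbed dict for the given inventory hosts.

    `hosts` is assumed to be a list of dicts with "name", "ip" and "os" keys.
    """
    devices = {}
    for host in hosts:
        devices[host["name"]] = {
            "os": host["os"],
            "credentials": {
                "default": {"username": username, "password": password},
            },
            "connections": {
                "cli": {"protocol": "ssh", "ip": host["ip"]},
            },
        }
    return {"devices": devices}


if __name__ == "__main__":
    # Hypothetical hosts using documentation addresses (RFC 5737).
    sample_hosts = [
        {"name": "r1", "ip": "192.0.2.1", "os": "iosxe"},
        {"name": "r2", "ip": "192.0.2.2", "os": "iosxe"},
    ]
    testbed = build_testbed(sample_hosts, "admin", "example-password")
    # JSON is used here only to print the structure; the pipeline writes YAML.
    print(json.dumps(testbed, indent=2))
```

Writing the resulting dict out as YAML (for example with PyYAML) would give a file along the lines of build/testbed.yml that the later stages load.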

The pipeline is triggered by manually creating a pipeline in the GitLab web UI and setting the variables that the pipeline definition references (JOB_NAME, DEVICE_USERNAME, DEVICE_PASSWORD and SYSLOG_SERVERS):

The pipeline then runs the stages in order:

For each stage you can open the logs and see in detail what is happening. As you can see, the precheck stage has a warning. Opening the precheck log shows that some of the tests failed:

3 of the 4 syslog servers that will be deployed in the next stage are not in the current config on the network devices.

I also set things up so that the test results are shown in the GitLab web UI:

Implementation

Here are the files and the folder structure:

📂 pipelines/jobs/add_syslog/

-

precheck_job.py– Run prechecks using pyATS -

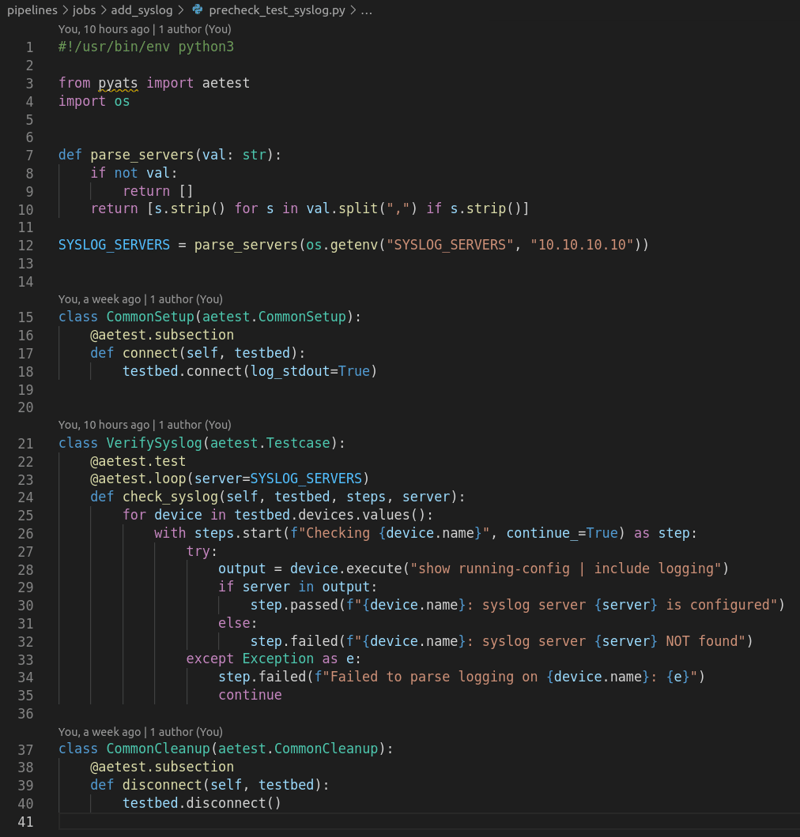

precheck_test_syslog.py– Test class verifying syslog server presence -

deploy_job.py– Deployment wrapper -

deploy_syslog.py– Actual syslog deployment logic -

postcheck_job.py– Run postchecks -

postcheck_test_syslog.py– Test verifying syslog server after deployment

Also updated:

- .gitlab-ci.yml – Pipeline definition
- docker-compose.yml – Environment for local dev/test

How It Works

Let’s break it down step by step.

1. GitLab CI

The .gitlab-ci.yml file ties it all together and defines how the pipeline is executed (I have removed some of the code to show only the relevant parts; see the repo for the full file):

stages:
  - setup
  - precheck
  - deploy
  - postcheck

# Run this pipeline only when it is created manually in the GitLab web UI
workflow:
  rules:
    - if: $CI_PIPELINE_SOURCE == "web"
      when: always
    - when: never

...

# Default image for the GitLab runner, plus the software the jobs require
default:
  image: python:3.9-slim
  before_script:
    ...
    - apt-get update
    - apt-get install -y --no-install-recommends openssh-client iputils-ping
    ...
    - pip install --upgrade pip
    - pip install -r pipelines/requirements.txt

# Setup stage runs a script that gets devices from the Inventory API and generates a testbed file for the next stages
setup:
  stage: setup
  tags: ["nautix"]
  variables:
    DEVICE_USERNAME: $DEVICE_USERNAME
    DEVICE_PASSWORD: $DEVICE_PASSWORD
    SYSLOG_SERVERS: $SYSLOG_SERVERS
  script:
    - python pipelines/get_devices_from_inventory.py --outfile build/testbed.yml
  artifacts:
    paths:
      - build/testbed.yml
    expire_in: 1 week

# Precheck stage allows tests to fail, as it only checks whether any of the syslog servers are already configured
precheck:
  stage: precheck
  needs: ["setup"]
  tags: ["nautix"]
  allow_failure: true
  script:
    - mkdir -p build/reports
    - pyats run job pipelines/jobs/$JOB_NAME/precheck_job.py --testbed build/testbed.yml --xunit build/reports/precheck
  artifacts:
    when: always
    paths:
      - build/reports/
    reports:
      junit:
        - build/reports/precheck/*.xml

# Deploy stage configures the devices in the testbed file with the syslog servers provided
deploy:
  stage: deploy
  needs:
    - job: setup
      artifacts: true
    - job: precheck
  tags: ["nautix"]
  script:
    - mkdir -p build/reports
    - pyats run job pipelines/jobs/$JOB_NAME/deploy_job.py --testbed build/testbed.yml --xunit build/reports/deploy
  artifacts:
    when: always
    paths:
      - build/reports/
    reports:
      junit:
        - build/reports/deploy/*.xml

# Postcheck stage verifies that the syslog servers are configured. It marks the pipeline as failed if any of the syslog servers are missing
postcheck:
  stage: postcheck
  needs:
    - job: setup
      artifacts: true
    - job: deploy
    - job: precheck
  tags: ["nautix"]
  script:
    - mkdir -p build/reports
    - pyats run job pipelines/jobs/$JOB_NAME/postcheck_job.py --testbed build/testbed.yml --xunit build/reports/postcheck
  artifacts:
    when: always
    paths:
      - build/reports/
    reports:
      junit:
        - build/reports/postcheck/*.xml
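The junit report entries in the pipeline definition hand the xUnit XML files to GitLab. If you want a quick summary of such a report outside the web UI, a small standard-library sketch like the one below could do it. It assumes the common JUnit layout (`testcase` elements with optional `failure` or `error` children); the exact XML that pyATS writes may carry more detail, and `summarize_junit` is a hypothetical helper, not part of the repo.

```python
import xml.etree.ElementTree as ET


def summarize_junit(xml_text):
    """Count test cases, failures and errors in a JUnit-style XML report."""
    root = ET.fromstring(xml_text)
    summary = {"tests": 0, "failures": 0, "errors": 0, "failed_names": []}
    for case in root.iter("testcase"):
        summary["tests"] += 1
        if case.find("failure") is not None:
            summary["failures"] += 1
            summary["failed_names"].append(case.get("name"))
        elif case.find("error") is not None:
            summary["errors"] += 1
            summary["failed_names"].append(case.get("name"))
    return summary


if __name__ == "__main__":
    # Hand-written sample report, not real pipeline output.
    sample = """<testsuites>
      <testsuite name="precheck">
        <testcase name="syslog_192.0.2.10"/>
        <testcase name="syslog_192.0.2.11">
          <failure message="missing on r1"/>
        </testcase>
      </testsuite>
    </testsuites>"""
    print(summarize_junit(sample))
```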

2. Precheck job

We first check whether the required syslog servers are already configured. The test runs two loops: one at function level that iterates over the syslog servers provided, and one inside it that iterates over all the devices from the testbed file.
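The nested loop can be sketched in plain Python, without pyATS, to show the comparison logic on its own. In the real job the configuration comes from the connected devices; here it is passed in as text. The `logging host <address>` line format is an assumption (IOS XE style), and both helper names are hypothetical.

```python
import re

# Matches lines such as "logging host 192.0.2.10" at the start of a line.
LOGGING_HOST_RE = re.compile(r"^logging host (\S+)", re.MULTILINE)


def configured_servers(running_config):
    """Extract syslog server addresses from 'logging host' config lines."""
    return set(LOGGING_HOST_RE.findall(running_config))


def check_syslog_servers(expected_servers, device_configs):
    """Outer loop over servers, inner loop over devices.

    Returns {server: [names of devices where that server is missing]}.
    """
    missing = {}
    for server in expected_servers:
        missing[server] = [
            name
            for name, config in device_configs.items()
            if server not in configured_servers(config)
        ]
    return missing


if __name__ == "__main__":
    configs = {
        "r1": "hostname r1\nlogging host 192.0.2.10\n",
        "r2": "hostname r2\n",
    }
    result = check_syslog_servers(["192.0.2.10", "192.0.2.11"], configs)
    for server, devices in result.items():
        status = "PASSED" if not devices else "FAILED on " + ", ".join(devices)
        print(f"{server}: {status}")
```

Looping over servers first gives one test result per syslog server, which matches how the precheck reports "3 of 4" servers as missing.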

3. Deployment job

This script connects to the devices and pushes the syslog configuration. The pyATS test script has the same structure as the precheck, with a Common Setup and a Common Cleanup, but it uses device.configure() to apply the configuration.

Note: The deploy stage could easily be swapped for another configuration method, e.g. NETCONF or Ansible. GitLab CI simply runs the job file deploy_job.py, so if you change what that file does, the rest of the pipeline should work as before.
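As a rough sketch of the step before the push, the snippet below turns a SYSLOG_SERVERS value into configuration lines. The comma-separated format of the variable and the `logging host` command syntax are assumptions, and the helper names are hypothetical; the resulting string is the kind of input that could be handed to device.configure().

```python
import ipaddress


def parse_syslog_servers(raw):
    """Split a comma-separated SYSLOG_SERVERS value and validate each address."""
    servers = []
    for item in raw.split(","):
        item = item.strip()
        if not item:
            continue  # tolerate trailing commas and extra whitespace
        ipaddress.ip_address(item)  # raises ValueError on a malformed address
        servers.append(item)
    return servers


def build_syslog_config(servers):
    """Return one 'logging host' line per server as a single config string."""
    return "\n".join(f"logging host {server}" for server in servers)


if __name__ == "__main__":
    servers = parse_syslog_servers("192.0.2.10, 192.0.2.11")
    print(build_syslog_config(servers))
```

Validating the addresses before connecting means a typo in the pipeline variable fails the job early instead of pushing a bad line to every device.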

4. Postcheck job

After deployment, we re-run the same test as in precheck.

If the syslog server is still missing, the pipeline fails.

This gives a full before–after validation loop.
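The before and after comparison can be illustrated with a small plain-Python sketch. It assumes each check produced a mapping of device name to the set of syslog servers found on it; `compare_states` and `change_succeeded` are hypothetical helpers, not the test code in the repo.

```python
def compare_states(before, after, expected):
    """Compare per-device syslog servers before and after the deploy.

    `before` and `after` map device name -> set of configured servers.
    """
    report = {}
    for device, post in after.items():
        pre = before.get(device, set())
        report[device] = {
            "added": sorted(post - pre),
            "still_missing": sorted(set(expected) - post),
        }
    return report


def change_succeeded(report):
    """The change is good only if no device is still missing a server."""
    return all(not entry["still_missing"] for entry in report.values())


if __name__ == "__main__":
    expected = ["192.0.2.10", "192.0.2.11"]
    before = {"r1": {"192.0.2.10"}, "r2": set()}
    after = {"r1": {"192.0.2.10", "192.0.2.11"}, "r2": {"192.0.2.10"}}
    report = compare_states(before, after, expected)
    for device, entry in report.items():
        print(device, entry)
    print("change succeeded:", change_succeeded(report))
```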

5. GitLab runner

When a pipeline is triggered, a GitLab runner picks up the jobs and executes the stages of the pipeline. For the runner to be able to fetch devices from the Inventory API, I needed to add a GitLab runner to my docker-compose file and run it locally. Here is an extract from the docker-compose file.

To let the runner communicate with the GitLab cloud (so that it can pick up the pipeline jobs), I had to register it (see below). In addition, I needed a "hack" so that the container the runner starts to execute each stage can reach the Inventory API.

# Register gitlab runner (You need to have this repo in your own gitlab account)
# GitLab instance URL: https://gitlab.com or your self-hosted URL
# Registration token: from your project/group in GitLab → Settings → CI/CD → Runners
# Description: nautix
# Executor: docker
# Default Docker image: python:3.9-slim
docker exec -it gitlab-runner gitlab-runner register

# Allow gitlab runner CI job container to talk with the Nautix services
sed -i '/\[runners.docker\]/a \ network_mode = "ccie-automation_default"' services/gitlab-runner/config/config.toml
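One thing to keep in mind is that the sed command appends a new line every time it runs. A hedged alternative is a small Python helper that only adds `network_mode` when the `[runners.docker]` section does not already have one. `ensure_network_mode` is a hypothetical function that works on the file content as text; reading and writing config.toml is left out.

```python
def ensure_network_mode(config_text, network):
    """Add network_mode under [runners.docker] unless it is already set."""
    lines = config_text.splitlines()
    for index, line in enumerate(lines):
        if line.strip() == "[runners.docker]":
            break
    else:
        raise ValueError("no [runners.docker] section found")

    # The section body ends at the next table header.
    for body_line in lines[index + 1:]:
        stripped = body_line.strip()
        if stripped.startswith("["):
            break
        if stripped.startswith("network_mode"):
            return config_text  # already configured, leave the text alone

    lines.insert(index + 1, f'    network_mode = "{network}"')
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Shortened, hand-written example of a runner config.
    sample = (
        "[[runners]]\n"
        '  name = "nautix"\n'
        "  [runners.docker]\n"
        '    image = "python:3.9-slim"\n'
    )
    print(ensure_network_mode(sample, "ccie-automation_default"))
```

Running the helper twice on the same content leaves it unchanged the second time, which makes it safe to call from a setup script.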

Why This Matters

Instead of “hoping” changes were applied correctly, we now have:

✔ Automated verification before changes

✔ Automated deployment

✔ Automated verification after changes

✔ Repeatability inside GitLab

This is NetDevOps in action.

Service Interactions update

I've added the new use case to the Nautix diagram.

📅 What’s Next

Blueprint item 2.8 Use Terraform to statefully manage infrastructure, given support documentation

2.8.a Loop control

2.8.b Resource graphs

2.8.c Use of variables

2.8.d Resource retrieval

2.8.e Resource provision

2.8.f Management of the state of provisioned resources

🔗 Useful Links

- GitLab Repo – My CCIE Automation Code

- pyATS documentation

- Genie documentation

- GitLab CI documentation

Blog series

- [My Journey to CCIE Automation – Week 1] Intro + Building a Python CLI app

- [My Journey to CCIE Automation – Week 2] Building a Inventory REST API + Docker

- [My Journey to CCIE Automation – Week 3] Orchestration API + NETCONF

- [My Journey to CCIE Automation – Week 4] Automating Network Discovery and Reports with Python & Ansible